STAR-CCM+ Installations on Tetralith & Sigma

Official homepage: https://mdx.plm.automation.siemens.com/star-ccm-plus

STAR-CCM+ is a CFD solver developed and distributed by Siemens/CD-adapco. It was initially designed to analyze the flow around obstacles and inside fluidic systems, and has since evolved into a tool for coupled multiphysics simulations.

Please contact NSC support if you have any questions or problems.

STAR-CCM+ is commercial software that requires a license. NSC only maintains the installation and does not provide any support for purchasing licenses.

Each STAR-CCM+ version includes a directory with documentation and examples, located at $STARCCM_ROOT/doc. The environment variable $STARCCM_ROOT is set once you have loaded a STAR-CCM+ module.

The documentation includes two main components:

- The user guide, e.g. UserGuide_13.04.pdf

- Several tutorial examples that are discussed in the user guide. The example data can be found in the directory $STARCCM_ROOT/doc/startutorialsdata
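After loading a module, you can locate the documentation from the shell. The function below is a minimal sketch and not an NSC-provided tool. The name starccm_docs is hypothetical. It also assumes the user guide file name starts with "UserGuide", as in the example above; other releases may name the file differently.

```bash
# Hypothetical helper: list the STAR-CCM+ user guide(s) and the tutorial data
# directory belonging to the currently loaded star-ccm+ module.
starccm_docs() {
  # $STARCCM_ROOT is only set after "module load star-ccm+/<version>"
  if [ -z "${STARCCM_ROOT:-}" ]; then
    echo "STARCCM_ROOT is not set; load a star-ccm+ module first" >&2
    return 1
  fi
  # Assumption: the user guide is named like UserGuide_13.04.pdf
  find "$STARCCM_ROOT/doc" -maxdepth 1 -iname 'userguide*.pdf' -print
  # Directory with the data for the tutorial examples
  if [ -d "$STARCCM_ROOT/doc/startutorialsdata" ]; then
    echo "$STARCCM_ROOT/doc/startutorialsdata"
  fi
}
```

Usage: run module load star-ccm+/2310 and then starccm_docs to print the paths.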

| Version | NSC Module | Numerical Precision |
|---|---|---|
| 2310 | star-ccm+/2310 | double precision |
| 2310 | star-ccm+/2310-mixed-precision | mixed precision |
| 2306 | star-ccm+/2306 | double precision |
| 2306 | star-ccm+/2306-mixed-precision | mixed precision |
| 2302 | star-ccm+/2302 | double precision |
| 2302 | star-ccm+/2302-mixed-precision | mixed precision |
| 2210 | star-ccm+/2210 | double precision |
| 2210 | star-ccm+/2210-mixed-precision | mixed precision |
| 2206 | star-ccm+/2206 | double precision |
| 2206 | star-ccm+/2206-mixed-precision | mixed precision |
| 2022.1 | star-ccm+/2022.1 | double precision |
| 2022.1 | star-ccm+/2022.1-mixed-precision | mixed precision |
| 2021.3 | star-ccm+/2021.3 | double precision |
| 2021.3 | star-ccm+/2021.3-mixed-precision | mixed precision |
| 2021.1 | star-ccm+/2021.1 | double precision |
| 2021.1 | star-ccm+/2021.1-mixed-precision | mixed precision |
| 2020.3 | star-ccm+/2020.3 | double precision |
| 2020.3 | star-ccm+/2020.3-mixed-precision | mixed precision |
| 2020.2 | star-ccm+/2020.2 | double precision |
| 2020.2 | star-ccm+/2020.2-mixed-precision | mixed precision |
| 2020.1 | star-ccm+/2020.1 | double precision |
| 2020.1 | star-ccm+/2020.1-mixed-precision | mixed precision |
| 2019.3.1 | star-ccm+/2019.3.1 | double precision |
| 2019.3.1 | star-ccm+/2019.3.1-mixed-precision | mixed precision |
| 2019.2 | star-ccm+/2019.2 | double precision |
| 2019.2 | star-ccm+/2019.2-mixed-precision | mixed precision |
| 2019.1.1 | star-ccm+/2019.1.1 | double precision |
| 2019.1.1 | star-ccm+/2019.1.1-mixed-precision | mixed precision |
| 13.06.011 | star-ccm+/13.06.011 | double precision |
| 13.06.011 | star-ccm+/13.06.011-mixed-precision | mixed precision |
| 13.04.011 | star-ccm+/13.04.011 | double precision |
| 13.04.010 | star-ccm+/13.04.010 | double precision |
| 12.06.010 | star-ccm+/12.06.010 | double precision |
| 12.04.011 | star-ccm+/12.04.011 | double precision |

Please note that STAR-CCM+ changed its version numbering scheme starting in 2019. We follow the official numbering scheme in the module names. Internally, the 2019 versions still use the old numbering, e.g. 14.02.012, which you will find in the absolute installation paths on Tetralith.

Double Precision/Mixed Precision Versions

STAR-CCM+ offers double precision and so-called mixed precision versions. To our understanding, the mixed precision version mainly uses single precision arithmetic, with a few subroutines and variables kept in double precision. The mixed precision version is therefore potentially less accurate, but it uses less memory and can be faster. We generally recommend using the double precision version. Use the mixed precision version only if you fully understand how it affects the accuracy of your results and what that implies.
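The module names in the table follow a fixed pattern, so a small helper can produce the module name from a version and a precision. It defaults to double precision, as recommended above. This is a minimal sketch: the function name starccm_module is hypothetical, and it does not check whether a mixed precision build exists. According to the table, 13.04.011 and older versions are installed in double precision only, so module load would fail for those.

```bash
# Hypothetical helper: build the NSC module name for a STAR-CCM+ version and
# precision, following the naming pattern of the table above.
# Defaults to double precision, which NSC generally recommends.
starccm_module() {
  local version=$1 precision=${2:-double}
  case $precision in
    double) echo "star-ccm+/$version" ;;
    mixed)  echo "star-ccm+/$version-mixed-precision" ;;
    *)
      echo "unknown precision '$precision' (use double or mixed)" >&2
      return 1
      ;;
  esac
}
```

Usage: module load "$(starccm_module 2310)" loads the double precision build, and module load "$(starccm_module 2310 mixed)" loads the mixed precision build.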

Load the STAR-CCM+ module corresponding to the version you want to use, e.g.

```bash
module load star-ccm+/2020.3
```

This loads STAR-CCM+ version 2020.3. The software is started with the command

```bash
starccm+
```

Computations are typically submitted with a Slurm batch script; see "Example Batch Script". To set up the model, define boundary conditions and other physical parameters, and visualize the results, one typically uses the graphical interface of STAR-CCM+.

Specification of MPI and Network Fabric

When running in parallel, you have to specify options for Intel MPI and the Intel Omni-Path network fabric. Note that the MPI options differ between STAR-CCM+ versions. Add the following options to your starccm+ launch command:

| Version | MPI Options |
|---|---|
| 13.04.010 and newer | -mpi intel -mpiflags "-bootstrap slurm" -fabric psm2 |
| 12.06.010 and older | -mpi intel -mpiflags "-bootstrap slurm -env I_MPI_FABRICS shm:tmi" |

For additional MPI debug information, add the following to mpiflags: -env I_MPI_DEBUG 5
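If you script launch commands for several versions, you can encode the table in a small Bash function that fills an array with the right options. Using an array keeps the quoted -mpiflags string together as a single argument. This is a sketch with hypothetical function names. It assumes that version strings from both numbering schemes sort correctly with GNU sort -V (e.g. 12.06.010 < 13.04.010 < 2019.2 < 2310).

```bash
# Return success if version $1 >= version $2 (GNU "sort -V" ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: fill the array MPI_OPTS with the MPI/fabric options
# from the table above for STAR-CCM+ version $1.
# Set STARCCM_MPI_DEBUG=1 to add "-env I_MPI_DEBUG 5" to the -mpiflags string.
set_mpi_opts() {
  local version=$1
  local flags="-bootstrap slurm"
  if ! version_ge "$version" 13.04.010; then
    # 12.06.010 and older: fabric selected through I_MPI_FABRICS
    flags+=" -env I_MPI_FABRICS shm:tmi"
  fi
  if [ "${STARCCM_MPI_DEBUG:-0}" = 1 ]; then
    flags+=" -env I_MPI_DEBUG 5"
  fi
  MPI_OPTS=(-mpi intel -mpiflags "$flags")
  if version_ge "$version" 13.04.010; then
    # 13.04.010 and newer: Omni-Path through PSM2
    MPI_OPTS+=(-fabric psm2)
  fi
}
```

Usage: after set_mpi_opts 2020.3, pass "${MPI_OPTS[@]}" to starccm+ in place of the MPI options, e.g. starccm+ -np "$SLURM_NPROCS" -machinefile "$NODEFILE" "${MPI_OPTS[@]}" followed by the remaining options.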

Example Batch Script

To run STAR-CCM+ in batch mode, submit it with a Slurm batch script:

```bash
#!/bin/bash
#SBATCH -n 4
#SBATCH -t 00:20:00
#SBATCH -J jobname
#SBATCH -A SNIC-xxx-yyy

module load star-ccm+/2020.3

# Write the list of allocated hosts to a machine file
NODEFILE=hostlist.$SLURM_JOB_ID
hostlist -e "$SLURM_JOB_NODELIST" > "$NODEFILE"

starccm+ \
  -np "$SLURM_NPROCS" \
  -machinefile "$NODEFILE" \
  -mpi intel -mpiflags "-bootstrap slurm" -fabric psm2 \
  -power -podkey <YOUR LICENCE KEY> -licpath 1999@flex.cd-adapco.com \
  -rsh jobsh \
  -batch testcase.sim

# Cleanup
rm "$NODEFILE"
```

Submit the script with: sbatch <script file>

You have to adjust the batch script as follows:

- Specify the number of cores with the option #SBATCH -n <cores>
- Specify the required wall time with #SBATCH -t <time>
- Change the job name with #SBATCH -J <job name>
- Specify your account name with #SBATCH -A <account name>
- Replace <YOUR LICENCE KEY> with your Power on Demand licence key
- Adjust the name of your simulation file (testcase.sim)

If you use an additional Java macro to control the execution of STAR-CCM+, add its name before the simulation file, e.g. -batch testcase.java testcase.sim

Basic Parallel Test

To test whether basic MPI communication works in STAR-CCM+, you can use the built-in test case -mpitest. Use more than one node so that the communication between nodes is tested as well. For example, start an interactive session with 4 tasks distributed over two nodes:

```bash
interactive -n4 --tasks-per-node=2 -A <YOUR ACCOUNT NAME>
```

Within the session, create the machine file:

```bash
NODEFILE=hostlist.$SLURM_JOB_ID
hostlist -e "$SLURM_JOB_NODELIST" > "$NODEFILE"
```

Version 13.04.010 and newer (13.04.010, 13.04.011, 13.06.011, 2019 and later):

```bash
starccm+ -np "$SLURM_NPROCS" -machinefile "$NODEFILE" \
  -mpi intel -mpiflags "-bootstrap slurm -env I_MPI_DEBUG 5" -fabric psm2 \
  -power -podkey <YOUR LICENSE KEY> -licpath 1999@flex.cd-adapco.com -rsh jobsh -mpitest
```

Version 12.04.011, 12.06.010:

```bash
starccm+ -np "$SLURM_NPROCS" -machinefile "$NODEFILE" \
  -mpi intel -mpiflags "-bootstrap slurm -env I_MPI_FABRICS shm:tmi -env I_MPI_DEBUG 5" \
  -power -podkey <YOUR LICENSE KEY> -licpath 1999@flex.cd-adapco.com -rsh jobsh -mpitest
```

When running the STAR-CCM+ GUI, NSC recommends using ThinLinc to access Tetralith.

For more information on how to use ThinLinc, please see "Running graphical applications using ThinLinc". ThinLinc is available for all platforms (Mac, Windows, Linux).

To run STAR-CCM+ interactively, we recommend using hardware-accelerated graphics, which is available on the GPU nodes and on the login nodes of Tetralith. To make use of the graphics card, you must start STAR-CCM+ through vglrun, as shown below. From version 2019.3.1 onward, you also have to specify the option -graphics native so that the graphics card is detected properly.

Command to launch STAR-CCM+ with GPU support, versions 12.04 up to 2019.2:

```bash
vglrun starccm+ -clientldpreload /usr/lib64/libvglfaker.so
```

Command to launch STAR-CCM+ with GPU support, version 2019.3.1 and newer:

```bash
vglrun starccm+ -clientldpreload /usr/lib64/libvglfaker.so -graphics native
```

If you do not use vglrun, or if you omit -clientldpreload or -graphics native, STAR-CCM+ falls back to software rendering. We found that some versions of STAR-CCM+ then display scenes of the geometry, mesh, etc. incorrectly. For example, the direction of the coordinate system may be inverted, or text may be displayed upside down. Navigating the scene is also very slow. On a compute node without a graphics card, rendering may reach only 3-5 frames per second, whereas with a GPU and vglrun, 40-50 frames per second can be achieved.
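Because the required launch options depend on the version, you can wrap the two commands above in a helper that adds -graphics native only when it is needed. This is a minimal sketch with hypothetical function names. It assumes the version you pass matches the loaded module, and that version strings order correctly with GNU sort -V (e.g. 2019.2 < 2019.3.1 < 2310).

```bash
# Return success if version $1 >= version $2 (GNU "sort -V" ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: fill the array GUI_CMD with the vglrun command line for
# STAR-CCM+ version $1; any further arguments are appended unchanged.
set_gui_cmd() {
  local version=$1
  shift
  GUI_CMD=(vglrun starccm+ -clientldpreload /usr/lib64/libvglfaker.so)
  # From 2019.3.1 on, "-graphics native" is needed to detect the GPU
  if version_ge "$version" 2019.3.1; then
    GUI_CMD+=(-graphics native)
  fi
  GUI_CMD+=("$@")
}
```

Usage: after module load star-ccm+/2020.3, run set_gui_cmd 2020.3 and then "${GUI_CMD[@]}". Extra options such as -mpi intel -fabric psm2 -np 2 can be passed after the version.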

Simulation files of 20-30 GB or larger may cause problems when they are loaded into the interactive GUI. In particular, it may not be possible to save the simulation file (*.sim), because STAR-CCM+ crashes while writing it. This appears to be an internal memory problem, and the saved simulation file is then incomplete and corrupted. To circumvent this memory problem, you can run STAR-CCM+ interactively in parallel. There are two ways to do this:

- Run the GUI on a login node, while STAR-CCM+ runs in parallel on a compute node (preferred method)

- Run STAR-CCM+ in parallel on a login node

1. Run the GUI on a login node, while STAR-CCM+ runs in parallel on a compute node

The best approach is to run the STAR-CCM+ GUI on a login node using vglrun, while the data-intensive operations are executed on a compute node. This gives you fast graphics rendering together with the full memory and parallel execution of a compute node. It also avoids conflicts with other users over the limited resources of the login nodes.

- Create an interactive session on a compute node: interactive -N1 -A <account>

- Once you are logged into the compute node, its name is shown in the prompt, e.g. <UserName>@n330

In a second terminal, start STAR-CCM+ on a login node:

```bash
vglrun starccm+ -clientldpreload /usr/lib64/libvglfaker.so -mpi intel -fabric psm2 -on <compute nodeID>:<number of cores>
```

For example, to run STAR-CCM+ with 4 cores on the compute node n330, where your interactive session is running:

```bash
vglrun starccm+ -clientldpreload /usr/lib64/libvglfaker.so -mpi intel -fabric psm2 -on n330:4
```

Remarks:

- STAR-CCM+ is launched automatically on the compute node. You only start STAR-CCM+ on the login node, not on the compute node yourself.

- This only works if you have created the interactive session on the compute node BEFORE you start STAR-CCM+ on the login node, since STAR-CCM+ connects to that compute node.

- When you load your simulation file (File->Load), STAR-CCM+ automatically selects the Process Options: Parallel on Named Hosts. The host name and the number of processes are already filled in, since they were provided on the command line.
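To avoid typing the node name and core count by hand, the interactive session on the compute node can print the login-node command for you. This is a minimal sketch with a hypothetical function name. It assumes that hostname -s returns the node name shown in the prompt (e.g. n330), and that Slurm sets SLURM_CPUS_ON_NODE in the interactive session. If either assumption does not hold, pass the node name and core count explicitly.

```bash
# Hypothetical helper, run inside the interactive session on the compute node:
# prints the command to paste into the second terminal on the login node.
# Defaults: node name from "hostname -s", core count from SLURM_CPUS_ON_NODE.
print_login_cmd() {
  local node=${1:-$(hostname -s)}
  local cores=${2:-${SLURM_CPUS_ON_NODE:-}}
  if [ -z "$cores" ]; then
    echo "usage: print_login_cmd [node] [cores] (or set SLURM_CPUS_ON_NODE)" >&2
    return 1
  fi
  echo "vglrun starccm+ -clientldpreload /usr/lib64/libvglfaker.so" \
       "-mpi intel -fabric psm2 -on ${node}:${cores}"
}
```

Usage: run print_login_cmd on the compute node and paste the printed line into the login-node terminal. For STAR-CCM+ 2019.3.1 and newer, append -graphics native as described above.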

2. Run STAR-CCM+ in parallel on a login node

Some users may have low priority when requesting an interactive session, which can result in long waiting times. In this case, you can also try to run STAR-CCM+ in parallel on a login node. Use this only when you experience problems with datasets of 20-30 GB. For even larger datasets, the login nodes are not appropriate, since you share their resources with many other users. Keep the number of cores to a minimum, for example two cores.

To start STAR-CCM+ in parallel on a login node, using vglrun:

```bash
vglrun starccm+ -clientldpreload /usr/lib64/libvglfaker.so -mpi intel -fabric psm2 -np 2
```

The easiest way is to pass the required options on the command line, as shown. Alternatively, you can choose the options manually when loading your simulation file (*.sim) in the File->Load->"Load a File" dialog:

- Process Options: Parallel on Local Host
- Compute Processes: 2
- Command: -mpi intel -fabric psm2 (must be included)

Do not include the option -mpiflags "-bootstrap slurm" on the login node, since it makes Intel MPI launch its processes through Slurm instead of on the local login node.

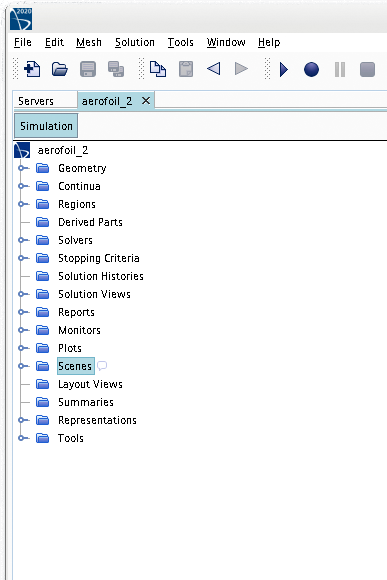

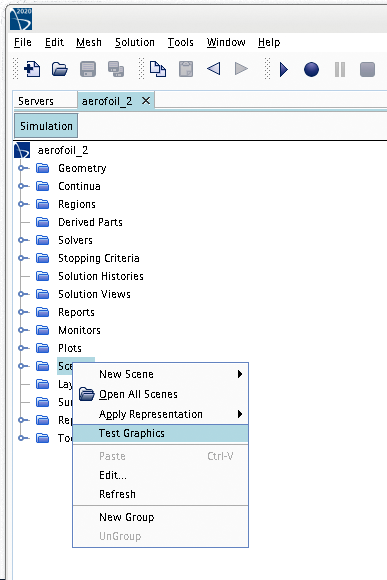

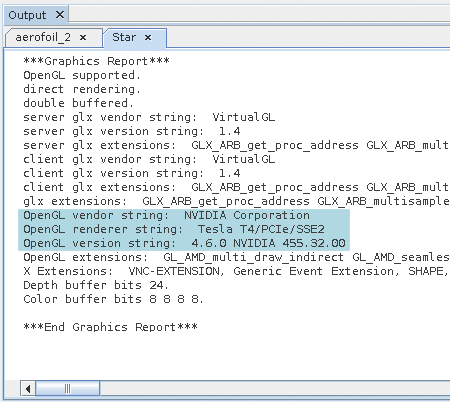

You can check whether the STAR-CCM+ GUI uses the graphics card correctly:

- Load the simulation

- In the "Simulation" view, right-click on the "Scenes" folder to open a context menu

- Choose "Test Graphics" from the menu (left-click)

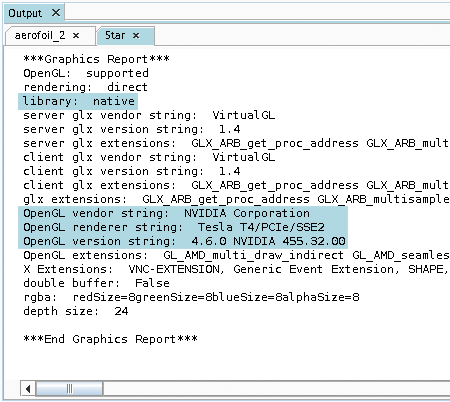

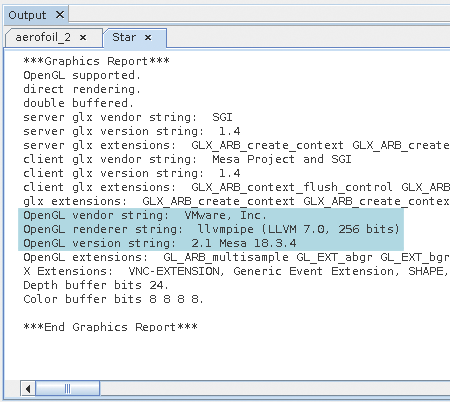

In the "Output" section, a new tab is added that contains a "Graphics Report":

GPU supported:

- OpenGL vendor string: NVIDIA Corporation

- OpenGL rendering string: The name of the graphics card, e.g. Tesla T4 or GTX 1060

- OpenGL version string: version of the NVIDIA driver

GPU not supported, software rendering:

- OpenGL vendor string: VMware Inc.

- OpenGL rendering string: llvmpipe

- OpenGL version string: Mesa version
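If you copy the Graphics Report text from the Output section into a file, you can classify it from the shell using the strings listed above. This is a minimal sketch. The function name is hypothetical, and it only looks for "llvmpipe" (software rendering) and an NVIDIA vendor string (GPU). It is not a complete parser of the report format.

```bash
# Hypothetical helper: classify a Graphics Report copied from the STAR-CCM+
# "Output" section into a text file (or piped on stdin).
# Prints "gpu", "software" or "unknown".
classify_graphics_report() {
  local report
  report=$(cat "${1:--}") || return 1
  if grep -qi 'llvmpipe' <<<"$report"; then
    echo software    # Mesa software rendering
  elif grep -qi 'vendor string:.*nvidia' <<<"$report"; then
    echo gpu         # rendering on the NVIDIA graphics card
  else
    echo unknown
  fi
}
```

Usage: classify_graphics_report report.txt, where report.txt is a hypothetical file containing the copied report.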

The following images show again where to find the Test Graphics option, as well as the Graphics Report for different versions of STAR-CCM+:

[Images: How to check GPU support. Panels: Select Scenes; Test Graphics; Graphics Report without GPU (STAR-CCM+ 2020.3); Graphics Report with GPU (STAR-CCM+ 2020.3); Graphics Report without GPU (STAR-CCM+ 12.04); Graphics Report with GPU (STAR-CCM+ 12.04)]
