Talks at the Berzelius AI Symposium

Listed in alphabetical order based on the speaker's last name.

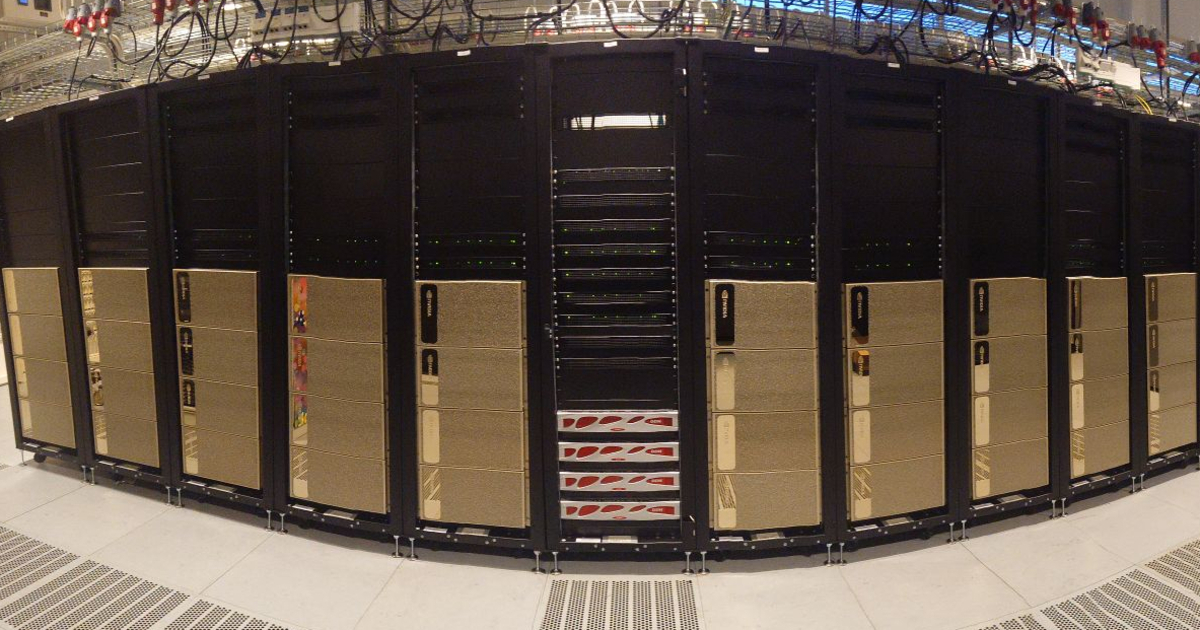

AI supercomputing for drug discovery

The time and costs associated with developing new drugs have risen dramatically. We need innovative new approaches more than ever. We are seeing promising breakthroughs: novel methods for molecular generation, genomics-at-scale, high-accuracy protein folding prediction. But these methods are extremely computationally intensive, at a time when Moore's Law is finally failing. As the world leader in accelerated computing, NVIDIA is uniquely well placed to understand the future applications of AI supercomputing in drug discovery. This talk will describe the types of collaborations we are undertaking with partners like AstraZeneca and GlaxoSmithKline, and highlight where compute-at-scale has an invaluable role to play in the future of the field.

Rob Brisk, Global lead for life science alliances at NVIDIA

Rob spent over a decade working as a hospital doctor in the NHS, specialising latterly in adult cardiology. He has a parallel background in computer science and AI, and believes deeply in the power of digital innovation to transform healthcare for the 21st century. At NVIDIA, Rob has the privilege of leading collaborations with world leaders in clinical care and drug discovery, which includes overseeing the scientific project pipeline for the Cambridge-1 supercomputer.

Learning across 16 million patients: Big data and HPC in healthcare

The London AI Centre partner hospitals provide care to a quarter of the UK population. King’s College London, jointly with Imperial College and Queen Mary University of London, is building an AI and big-data infrastructure across 10 hospital trusts to deliver the vision of AI-enabled care. The infrastructure, built with privacy in mind, enables all clinical data (e.g. imaging, genetics, blood, pharmacy, interventions, medical decisions, costs) to be used for research, clinical and operational purposes, allowing algorithms to be trained on clinical data without the data ever leaving the hospital. To achieve this, we have built a large computing infrastructure within each hospital with the help of NVIDIA, and a centralised orchestration system to enable federated learning across multiple hospitals. This infrastructure has great potential for real-world impact, not only because it will enable access to data, but also because it aims to bring together large companies and early-stage start-ups (industry), clinicians (hospitals), and scientists (university) into the same ecosystem.

Jorge Cardoso, Senior Lecturer in Artificial Medical Intelligence at King’s College London

M Jorge Cardoso is Senior Lecturer in Artificial Medical Intelligence at King’s College London, where he leads a research portfolio on big data analytics, quantitative radiology and value-based healthcare. Jorge is also the CTO of the new London Medical Imaging and AI Centre for Value-based Healthcare. He has more than 12 years of expertise in advanced image analysis, big data, and artificial intelligence, and co-leads the development of project MONAI, a deep-learning platform for artificial intelligence in medical imaging. He is also a founder of BrainMiner and Elaitra, two medtech startups aiming to improve neurological care and breast cancer diagnosis, respectively.

Synthesizing high resolution medical volumes using 3D GANs

In this presentation I will show how 3D generative adversarial networks can be used to synthesize realistic medical volumes. As it is more challenging to share medical images, due to ethics and GDPR, synthetic volumes may be a solution to create large training datasets in medical imaging. This may be necessary to accelerate deep learning research in medical imaging, where a major hurdle is access to large datasets.

Anders Eklund, Associate Professor in Neuroimaging at LiU

Anders Eklund is an associate professor at LiU who works on deep learning, image processing, statistics and high performance computing for medical imaging data, especially brain images. Current research includes synthetic images, image segmentation and fusion of imaging and non-imaging data. He has used CUDA since 2008 and is now eager to fully take advantage of Berzelius.

Learning vision is easy?

The visual cortex is a major area in the human brain and the majority of input from the environment is perceived by vision. Still, it was believed for decades that computer vision is a simple problem that can be addressed by student projects or clever system design based on concepts from related fields. About a decade ago, the field observed a major breakthrough with the advent of deep learning, to a large extent enabled by novel computational resources in the form of GPUs. Since then, the field has exploded and thousands of black-box approaches to solve vision problems have been developed. However, it turned out that previously known concepts such as uncertainty and geometry are still relevant when we want to better understand deep learning methods and achieve state-of-the-art performance.

Michael Felsberg, Professor in Computer Vision at LiU

PhD in Computer Vision 2002, Kiel University. Since then affiliated with LiU. Docent 2005, full Professor 2008. Several awards, e.g. Olympus award 2005, two paper awards in 2021, and mentioned as highest ranked AI researcher in Sweden in Vinnova's 2018 report. Over 20,000 citations, area chair for e.g. CVPR, ECCV, and BMVC. 12 graduated PhD students. Research focus: Machine learning for machine perception.

Major AI Initiatives in Sweden and in Europe

The purpose of this talk is to give an overview of the AI research, innovation and education ecosystem in Sweden as well as some of the major European AI initiatives such as the four ICT-48 networks of AI research excellence centers and the AI, Data and Robotics Partnership. Two initiatives that will be highlighted are the newly started Wallenberg AI and Transformative Technologies Education Development Program (WASP-ED) and the TAILOR ICT-48 network on the scientific foundations of Trustworthy AI.

Fredrik Heintz, Professor of Computer Science at LiU

Professor of Computer Science at Linköping University, where he leads the Reasoning and Learning lab. His research focus is artificial intelligence, especially Trustworthy AI and the intersection between machine reasoning and machine learning. Director of the Wallenberg AI and Transformative Technologies Education Development Program (WASP-ED), Director of the Graduate School of the Wallenberg AI, Autonomous Systems and Software Program (WASP), Coordinator of the TAILOR ICT-48 network developing the scientific foundations of Trustworthy AI, and President of the Swedish AI Society. Fellow of the Royal Swedish Academy of Engineering Sciences (IVA).

SciLifeLab-KAW Data-Driven Life Science (DDLS) program: the promise of a national program at the launch phase

Life science has become increasingly data-centric, data-dependent and data-driven. There is an exponential growth of data in terms of quantity and complexity. The importance of data has been further emphasized by the pandemic and the quest for real-time access to Covid-19 research data and the growing need to make rapid data-dependent informed decisions. The SciLifeLab and Wallenberg National Program on Data-Driven Life Science (DDLS) was launched in 2021 with a mission to recruit and train the next generation of computational life scientists, and to create national data handling capabilities and collaborative research programs in four areas of data-driven life science: i) molecular and cellular biology, ii) biodiversity and evolution, iii) biology and epidemiology of infection and iv) precision medicine and diagnostics. The program is funded by the Knut and Alice Wallenberg (KAW) foundation with up to 3.2 BSEK over 11 years and is coordinated by the National Life Science Infrastructure SciLifeLab. The program will engage 11 partners (KTH, KI, SU, UU, UmU, SLU, LiU, CHT, GU, LU, and NRM), recruit some 40 new group leaders and train hundreds of PhD students and postdocs, contribute to national data handling infrastructure and FAIR practices and to data analysis capabilities, and collaborate with the WASP program and with industry. This presentation will describe the DDLS program and provide examples of recent data-driven life science breakthroughs.

Olli Kallioniemi, Director of the Science for Life Laboratory and Professor of Molecular Precision Medicine at KI

Olli Kallioniemi, M.D., Ph.D. is director of the Science for Life Laboratory, a national infrastructure for life sciences in Sweden and also a professor in Molecular Precision Medicine at the Karolinska Institutet. He directs a major new national SciLifeLab program on Data-Driven Life Science (DDLS) funded by the Knut and Alice Wallenberg Foundation over a 12-year period.

Olli Kallioniemi’s research focuses on functional and systems medicine of cancer, with an emphasis on laboratory and data-driven approaches for improving the diagnostics and therapy of acute leukemias, ovarian and prostate cancer. Olli Kallioniemi is an author of 400 publications in PubMed and has 21 issued patents. He has supervised 27 doctoral theses and 30 postdocs.

Learning compositional, structured, and interpretable models of the world

Despite their fantastic achievements in fields such as Computer Vision and Natural Language Processing, state-of-the-art Deep Learning approaches differ from human cognition in fundamental ways. While humans can learn new concepts from just a single or few examples, and effortlessly extrapolate new knowledge from concepts learned in other contexts, Deep Learning architectures generally rely on large amounts of data for their learning. Moreover, while humans can make use of contextual knowledge of e.g. laws of nature and insights into how others reason, this is highly non-trivial for a regular Deep Learning algorithm. There are indeed plenty of applications where estimation accuracy is central, where regular Deep Learning architectures are purposeful. However, models that learn in a more human-like manner have the potential to be more adaptable to new situations, more data efficient and also more interpretable to humans - a desirable property for Intelligence Augmentation applications with a human in the loop, e.g. medical decision support systems or social robots. In this talk I will highlight projects in my group where we explore object affordances, disentanglement, multimodality, and cause-effect representations to accomplish compositional, structured, and interpretable models of the world.

Hedvig Kjellström, Professor at KTH

Hedvig Kjellström is a Professor in the Division of Robotics, Perception and Learning at KTH Royal Institute of Technology, Sweden. She is also a Principal AI Scientist at Silo AI, Sweden and an affiliated researcher in the Max Planck Institute for Intelligent Systems, Germany. Her research focuses on methods for enabling artificial agents to interpret human and animal behavior. These ideas are applied in the study of human aesthetic bodily expressions such as in music and dance, modeling and interpreting human communicative behavior, the understanding of animal behavior and cognition, and intelligence amplification - AI systems that collaborate with and help humans. Hedvig has received several prizes for her research, including the 2010 Koenderink Prize for fundamental contributions in Computer Vision. She has written around 100 papers in the fields of Computer Vision, Machine Learning, Robotics, Information Fusion, Cognitive Science, Speech, and Human-Computer Interaction. She is mostly active within Computer Vision, where she is an Associate Editor for IEEE TPAMI and regularly serves as Area Chair for the major conferences.

FAIR life science data on Berzelius

In life science, the data analyzed comes from biological systems that are often difficult to study for many reasons. These systems are for example often complex, open, noisy, spanning widely different spatiotemporal scales, and governed by nonlinear dynamical processes. Life science is now going through a transformative period, thanks to the combination of rapidly advancing measurement technologies, powerful new compute resources and strong bioinformatics algorithm development that utilizes the new computer architectures, enabling us to analyze the underlying biological systems. But one particularly important component in this progress has been the data sharing that has been developed by the life science community for decades, leading to the recent principles for Open Science and FAIR data. The Berzelius system now provides Swedish researchers with unparalleled compute capacity for AI/ML, and in this presentation, I will discuss how life science data will come to Berzelius for analysis and how the results will be shared.

Johan Rung, Head of SciLifeLab Data Centre

Johan Rung, PhD in Engineering Physics, has worked in bioinformatics for 25 years and is Head of SciLifeLab Data Centre, which provides scientific and technical services for life science data for the SciLifeLab infrastructure platforms and the Data-Driven Life Science research program. His own research has focused on human genomics and transcription regulation, with studies on for example type 2 diabetes, skeletal muscle metabolism, and kidney cancer. He held researcher positions at McGill University in Montreal and the European Bioinformatics Institute in Hinxton, UK, before returning to Sweden and starting the SciLifeLab Data Centre in 2016.

Large-scale language models for Swedish

Large-scale language models have revolutionized the field of natural language processing (and AI in general) during the last couple of years. This talk provides a brief overview of the current development in this area, and presents our ongoing work to build large-scale language models for Swedish using Berzelius.

Magnus Sahlgren, Head of research, NLU at AI Sweden

Magnus Sahlgren, Ph.D. in computational linguistics, is currently head of research for natural language understanding at AI Sweden. His research is situated at the intersection between NLP, AI, and machine learning, and focuses specifically on questions about how computers can learn and understand languages. Magnus is mostly known for his work on distributional semantics and word embeddings, and has previously held positions at RISE (Research Institutes of Sweden), the Swedish Defense Research Agency, and founded the language technology company Gavagai AB.

Artificial Intelligence, Computational Fluid Dynamics and Sustainability

The advent of new powerful deep neural networks (DNNs) has fostered their application in a wide range of research areas, including more recently in fluid mechanics. In this presentation, we will cover some of the fundamentals of deep learning applied to computational fluid dynamics (CFD). Furthermore, we explore the capabilities of DNNs to perform various predictions in turbulent flows: we will use convolutional neural networks (CNNs) for non-intrusive sensing, i.e. to predict the flow in a turbulent open channel based on quantities measured at the wall. We show that it is possible to obtain very good flow predictions, outperforming traditional linear models, and we showcase the potential of transfer learning between friction Reynolds numbers of 180 and 550. These non-intrusive sensing models will play an important role in applications related to closed-loop control of wall-bounded turbulence. We also draw relevant connections between the development of AI and the achievement of the 17 Sustainable Development Goals of the United Nations.

Ricardo Vinuesa, Associate Professor at KTH

Dr. Ricardo Vinuesa is an Associate Professor at the Department of Engineering Mechanics, at KTH Royal Institute of Technology in Stockholm. He is also a Researcher at the AI Sustainability Center in Stockholm and Vice Director of the KTH Digitalization Platform. He received his PhD in Mechanical and Aerospace Engineering from the Illinois Institute of Technology in Chicago. His research combines numerical simulations and data-driven methods to understand and model complex wall-bounded turbulent flows, such as the boundary layers developing around wings, obstacles, or the flow through ducted geometries. Dr. Vinuesa’s research is funded by the Swedish Research Council (VR) and the Swedish e-Science Research Centre (SeRC). He has also received the Göran Gustafsson Award for Young Researchers.

The AI revolution in structural biology

Protein structure prediction is a classical problem in structural biology: given the building blocks of the protein, the protein sequence, the protein adopts a unique 3D structure to perform its function. The protein sequence is easy to get experimentally, but the 3D structure (i.e. the x, y, z coordinates for each atom in the structure, often more than 10,000 atoms) requires hard work and can take years to determine experimentally. Thus, having methods that can predict the 3D structure from sequence would revolutionize structural biology. During the last years DeepMind (a Google-owned company) has been working on this problem using AI, and last year they presented a solution that demonstrated unprecedented performance - AlphaFold. AlphaFold is an end-to-end method that is able to take the protein sequence as input and generate 3D coordinates of all atoms in a conformation that is often close to the coordinates that would take years to obtain experimentally. This summer, DeepMind released AlphaFold open source, making the tool available to everyone. The AI revolution in structural biology had begun. In the talk I will focus on the technical aspects of AlphaFold and how we have used Berzelius to understand and also improve certain aspects of AlphaFold.

Björn Wallner, Professor in Bioinformatics at Linköping University

Björn Wallner studies protein structure, dynamics and their connection to protein function using computational methods. He has more than 20 years of experience using machine learning in bioinformatics and has developed several methods that are state-of-the-art in the field. Recent claim to fame: cited in the AlphaFold2 paper and cited in the abstract of the AlphaFold-multimer paper.

An overview of the Wallenberg AI, Autonomous Systems and Software Program (WASP)

This presentation will take its starting point in the underpinning trends in society that motivated the initiation of the WASP program in 2015. WASP is the largest individual research program in Swedish funding history, with a total budget of 5.5 BSEK over 15 years. The ambition of the program is to graduate 600 PhDs and to establish 80 new research groups in the WASP domain. A clear focus of a large fraction of the WASP projects is the development and use of machine learning approaches. In view of this, access to large-scale resources tailored to AI applications is of the highest possible importance to WASP. During 2021 WASP has initiated a number of new instruments such as the NESTs program and collaborations with the Data-Driven Life Science (DDLS) program. The presentation will conclude with an overview of these instruments and their current status.

Anders Ynnerman, Director of WASP and Professor of Scientific Visualization at Linköping University

Professor Anders Ynnerman holds the chair in scientific visualization at Linköping University and is the director of the Wallenberg AI, Autonomous Systems and Software Program. He also directs the Norrköping Visualization Center C. His research focuses on visualization of large and complex data with applications in a wide range of areas including biomedical research and astronomy. Ynnerman is a member of the Swedish Royal Academy of Engineering Sciences and the Royal Swedish Academy of Sciences. In 2017 he was honoured with the King’s medal for his contributions to science and in 2018 he received the IEEE VGTC technical achievement award.
