Berzelius

Berzelius is the premier AI/ML cluster at NSC. It was donated to NSC by the Knut and Alice Wallenberg foundation in 2020 and it was installed in the spring of 2021. It was expanded in 2023 and again in 2025 by donations from KAW. It is used for research by Swedish academic research groups.

Access for projects to Berzelius is granted by NSC via an application process in the project repository SUPR.

Berzelius is open for project applications from Swedish academic researchers, as described in more detail on Resource Allocations on Berzelius.

- Access to one type of compute node (e.g. Berzelius-Ampere) does not automatically guarantee access to the other type (e.g. Berzelius-Hopper).

- If a PI already has an allocation on Berzelius-Ampere and would like to access Berzelius-Hopper, we encourage the PI to submit a continuation proposal requesting both, rather than a new project proposal for access to Berzelius-Hopper. This can be done by cloning an existing proposal; there is no need to wait until the project end date.

- Please note that Berzelius-Hopper is a smaller resource than Berzelius-Ampere, with which it shares storage, and we therefore cannot guarantee approval of a large number of proposals.

Compute resources are allocated via the SLURM resource manager. User access to the system login nodes is provided via SSH and the ThinLinc remote desktop solution.
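As an illustration of batch use, the following Python sketch generates a minimal Slurm batch script requesting one GPU. The job name, time limit and `python train.py` command are hypothetical placeholders, and Berzelius-specific options (account, partition or node-type constraints) are deliberately left out; consult the Berzelius user guide for those.

```python
def make_sbatch_script(job_name: str, gpus: int, hours: int, command: str) -> str:
    """Return the text of a minimal Slurm batch script (illustrative only)."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --gpus={gpus}",             # total number of GPUs for the job
        f"#SBATCH --time={hours:02d}:00:00",  # wall-clock limit as HH:MM:SS
        command,                              # hypothetical workload to run
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(make_sbatch_script("demo", gpus=1, hours=2, command="python train.py"))
```

Saved to a file such as `job.sh`, a script like this would be submitted from a login node with `sbatch job.sh`.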

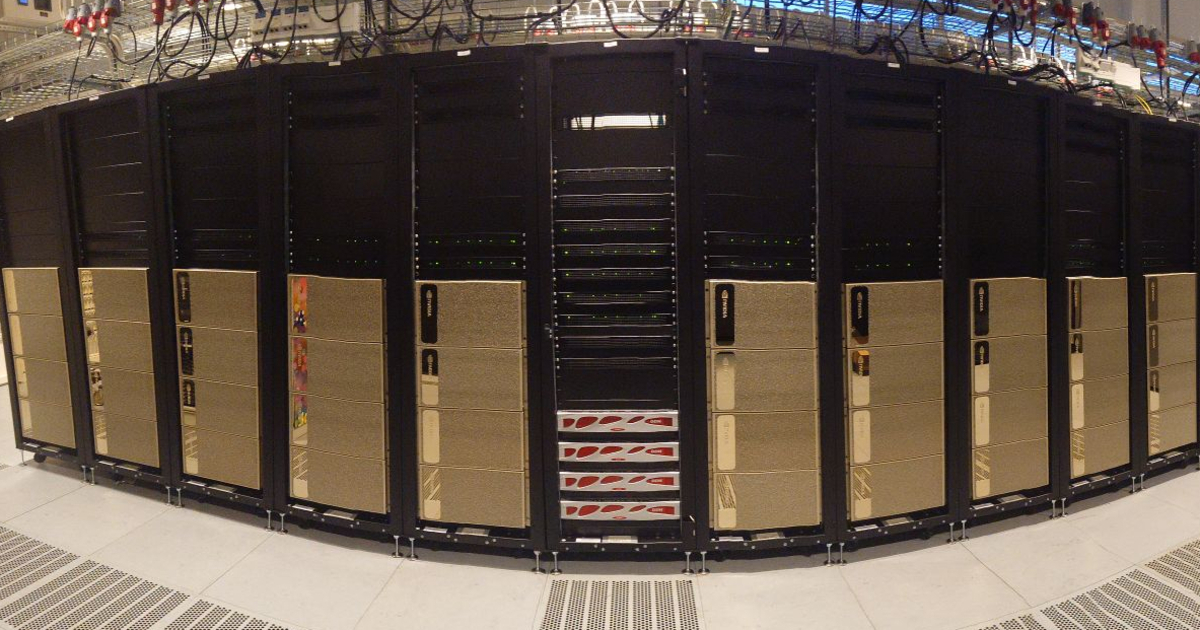

Berzelius Ampere

Berzelius Ampere (formerly known as Berzelius) is an NVIDIA® SuperPOD consisting of 94 NVIDIA® DGX-A100 compute nodes supplied by Atos/Eviden and 8 CPU nodes also supplied by Eviden. The original 60 “thin” DGX-A100 nodes are each equipped with 8 NVIDIA® A100 Tensor Core GPUs, 2 AMD Epyc™ 7742 CPUs, 1 TB RAM and 15 TB of local NVMe SSD storage. The A100 GPUs in these nodes have 40 GB of on-board HBM2 VRAM. The 34 newer “fat” DGX-A100 nodes are each equipped with 8 NVIDIA® A100 Tensor Core GPUs, 2 AMD Epyc™ 7742 CPUs, 2 TB RAM and 30 TB of local NVMe SSD storage. The A100 GPUs in these nodes have 80 GB of on-board HBM2 VRAM. The CPU nodes are each equipped with 2 AMD Epyc™ 9534 CPUs, 1.1 TB RAM and 6.4 TB of local NVMe SSD storage.
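The figures above can be multiplied out to get the aggregate capacity of the DGX-A100 part of Berzelius Ampere (60 thin + 34 fat = 94 nodes). The sketch below uses only the per-node numbers quoted in this section; it excludes the CPU nodes, and the results are nominal totals that ignore any capacity reserved by the system.

```python
# Berzelius Ampere DGX-A100 node types, from the description above:
# name -> (number of nodes, GPUs per node, GB of HBM2 per GPU, TB of local NVMe)
DGX_A100 = {
    "thin": (60, 8, 40, 15),
    "fat": (34, 8, 80, 30),
}


def totals(node_types: dict) -> tuple:
    """Return (total GPUs, total GPU memory in GB, total local NVMe in TB)."""
    gpus = sum(n * g for n, g, _, _ in node_types.values())
    vram = sum(n * g * mem for n, g, mem, _ in node_types.values())
    nvme = sum(n * disk for n, _, _, disk in node_types.values())
    return gpus, vram, nvme


if __name__ == "__main__":
    gpus, vram, nvme = totals(DGX_A100)
    # 752 A100 GPUs, 40960 GB GPU memory, 1920 TB local NVMe
    print(f"{gpus} A100 GPUs, {vram} GB GPU memory, {nvme} TB local NVMe")
```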

Fast compute interconnect is provided via 8x NVIDIA® Mellanox® HDR links per DGX, connected in a non-blocking fat-tree topology. In addition, every node is equipped with a dedicated NVIDIA® Mellanox® HDR storage interconnect.

All nodes have a local disk where applications can store temporary files. The size of this disk (available to jobs as /scratch/local) is 15 TB on “thin” nodes, 30 TB on “fat” nodes, and 6.4 TB on CPU nodes, and it is shared between all jobs using the node.
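Because /scratch/local is shared between all jobs on a node, it is prudent for a job to work in its own subdirectory and remove it when finished. The Python sketch below does this with a context manager. Naming the directory after the `SLURM_JOB_ID` environment variable that Slurm sets in a job, and falling back to the system temporary directory when /scratch/local does not exist (e.g. when testing off-cluster), are assumptions of this example rather than NSC policy.

```python
import os
import shutil
import tempfile
from contextlib import contextmanager
from pathlib import Path

SCRATCH = Path("/scratch/local")  # node-local NVMe disk, shared by all jobs on the node


@contextmanager
def local_scratch():
    """Yield a private directory on node-local scratch and delete it afterwards."""
    # Assumption: fall back to the system temp dir when not on a compute node.
    base = SCRATCH if SCRATCH.is_dir() else Path(tempfile.gettempdir())
    job_id = os.environ.get("SLURM_JOB_ID", "nojob")
    # mkdtemp adds a unique suffix, so concurrent jobs on the node cannot collide.
    path = Path(tempfile.mkdtemp(prefix=f"job-{job_id}-", dir=base))
    try:
        yield path
    finally:
        # Free the shared disk for other jobs, even if the work failed.
        shutil.rmtree(path, ignore_errors=True)


if __name__ == "__main__":
    with local_scratch() as workdir:
        (workdir / "intermediate.bin").write_bytes(b"\x00" * 1024)
        print("working in", workdir)
```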

Berzelius Hopper

The latest phase of the Berzelius service is Berzelius Hopper, which consists of 16 NVIDIA® DGX-H200 compute nodes supplied by Eviden and 8 CPU nodes also supplied by Eviden.

The DGX H200 nodes are each equipped with 8 NVIDIA® H200 141 GB GPUs, 2 Intel® 8480C CPUs and 2.1 TB RAM. The CPU nodes are each equipped with 2 AMD Epyc™ 9534 CPUs, 1.1 TB RAM and 6.4 TB of local NVMe SSD storage. The DGX H200 nodes are connected by a fast interconnect with 8x NVIDIA® Mellanox® NDR links per DGX in a non-blocking fat-tree topology. This interconnect is separate from the one connecting the DGX A100 nodes in Berzelius Ampere.
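When choosing between node types, the GPU memory available on a single node is often the deciding figure. The sketch below multiplies out the per-GPU memory quoted on this page for the three DGX node types, plus the total for the 16 H200 nodes. These are nominal figures; the memory actually usable by a job may be somewhat lower.

```python
# (GPUs per node, GB of on-board memory per GPU), from the hardware descriptions
GPU_NODES = {
    "DGX-A100 thin": (8, 40),
    "DGX-A100 fat": (8, 80),
    "DGX-H200": (8, 141),
}
H200_NODE_COUNT = 16  # number of DGX-H200 nodes in Berzelius Hopper


def gpu_memory_per_node(gpus: int, gb_per_gpu: int) -> int:
    """Nominal aggregate GPU memory (GB) on one node."""
    return gpus * gb_per_gpu


if __name__ == "__main__":
    for name, (gpus, gb) in GPU_NODES.items():
        print(f"{name}: {gpu_memory_per_node(gpus, gb)} GB GPU memory per node")
    h200_gpus, h200_gb = GPU_NODES["DGX-H200"]
    total_gpus = H200_NODE_COUNT * h200_gpus
    total_gb = H200_NODE_COUNT * gpu_memory_per_node(h200_gpus, h200_gb)
    # 128 GPUs, 18048 GB in total
    print(f"Berzelius Hopper: {total_gpus} GPUs, {total_gb} GB in total")
```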

All nodes have a local disk where applications can store temporary files. The size of this disk (available to jobs as /scratch/local) is 30 TB on H200 nodes and 6.4 TB on CPU nodes, and it is shared between all jobs using the node.

Berzelius Hopper is accessed through a new set of login nodes separate from those in the original Berzelius installation and also has new servers for other supporting tasks.

Berzelius Storage

Shared, central storage accessible from all compute nodes of the cluster is provided by a storage cluster from VAST Data consisting of 8 CBoxes and 3 DBoxes using an NVMe-oF architecture. The storage servers are connected end-to-end to the GPUs using a high-bandwidth interconnect separate from the East-West compute interconnect. The installed physical storage capacity is 3 PB, but due to compression and deduplication the effective capacity will be higher in practice.

User Area